This is a follow-up to a previous post, “Using HP iLO Scripting Tools for Windows PowerShell”. HP released an updated version 1.1 of their iLO PowerShell tools on 20 Mar 2014.

Before I jump into the new release and what’s included in it, I want to cover something I’ve received a couple of comments and Twitter pings about: how to download the installer. I’ll go over that here and again at the end, since this release makes upgrades easier.

First off, I have to say that the HP website and the EMC PowerLink site continually trade places in my mind as the most difficult to navigate and link to. They both contain so much information that I often find them hard to navigate, and linking to content is even more hit or miss: I’m never sure a deep link will still work the next time I need it.

Enough of that sidebar. The HP iLO PowerShell tools can be downloaded with a four-step procedure:

One:

Visit the vanity URL http://hp.com/go/powershell and click the red [DOWNLOAD] button.

Two:

You should see the HP Support Center page. From there, click View and download all drivers, software and firmware for this product.

Three:

There are multiple versions of the installer. Choose the one for your flavor of Windows 7/8/2012, in 32- or 64-bit.

Four:

After all that, you’re finally presented with the actual MSI. Click [DOWNLOAD].

Run that MSI and let it do its thing. If you already have a previous version installed, it will be upgraded with the newest content and features.

Either way, when it’s finished pop open a new PowerShell session.

First, let’s check the version:

```powershell
PS C:\> Get-HPiLoModuleVersion
```

Previously I wrote about the 1.0.0.0 release. As you can see, this latest release is 1.1.0.0.

This latest release includes a few new CmdLets.

To list the available CmdLets:

```powershell
PS C:\> Get-Help *HPiLO*
```

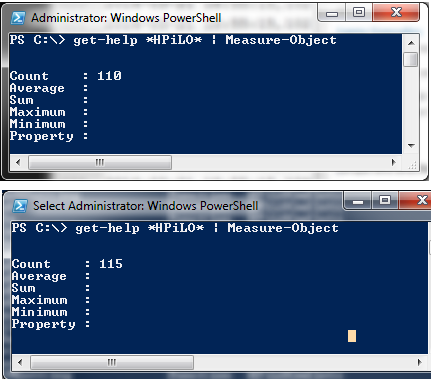

Side by side, we can see the new additions:

Previously there were 110 CmdLets available.

Throw a quick Measure-Object on the pipeline and the count shows 5 additional CmdLets.
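If you want to repeat that comparison after each upgrade, a small function like the one below can do the counting and also break the commands down by verb, which makes the Get/Set/Update differences easier to spot. This is a minimal sketch, not something that ships with HP’s tools: the function name Get-HPiLOCmdletSummary is my own, and the module name HPiLOCmdlets is an assumption based on the name used in the module’s help text.

```powershell
# Hypothetical helper (not part of HP's module): count the module's commands per verb.
function Get-HPiLOCmdletSummary {
    [CmdletBinding()]
    param(
        # Assumed module name, taken from the HPiLOCmdlets name used in the module's help
        [string]$ModuleName = 'HPiLOCmdlets'
    )
    # Collect every cmdlet/function the module exports (empty if it isn't installed)
    $commands = @(Get-Command -Module $ModuleName -CommandType Cmdlet, Function -ErrorAction SilentlyContinue)

    # One row per verb (Get, Set, Update, ...) with the number of commands using it
    $commands | Group-Object -Property Verb | Sort-Object -Property Name |
        Select-Object -Property @{ Name = 'Verb'; Expression = { $_.Name } }, Count

    # Final row: the overall total, roughly comparable to Get-Help *HPiLO* | Measure-Object
    [pscustomobject]@{ Verb = 'Total'; Count = $commands.Count }
}

# Usage: run under each module version and compare the output side by side
Get-HPiLOCmdletSummary
```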

While the Get-*HPiLo count appears unchanged, there are 3 new Set-*HPiLO CmdLets.

There are also two new Update-*HPiLO CmdLets:

Update-HPiLOFirmware

DESCRIPTION

The Update-HPiLOFirmware cmdlet copies a specified file to iLO, starts the upgrade process, and reboots the iLO after the image has been successfully flashed. You must have Configure iLO Settings privilege to execute this command.

This should be useful as a way to push out iLO firmware updates via PowerShell.
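As a rough idea of what that could look like across several servers, here is a sketch that flashes one image to a list of iLOs in turn and honors -WhatIf, so you can preview the targets first. Treat it as untested against real hardware: Invoke-iLOFirmwareRollout is a hypothetical name, and I’m assuming Update-HPiLOFirmware takes -Server, -Username and -Password like the other HPiLO cmdlets and the image path via -Location. Check Get-Help Update-HPiLOFirmware -Full before relying on those parameter names.

```powershell
# Hypothetical helper, not part of HP's module. Assumptions to verify with
# Get-Help Update-HPiLOFirmware -Full: it accepts -Server, -Username and -Password
# like the other HPiLO cmdlets, and takes the firmware image path via -Location.
function Invoke-iLOFirmwareRollout {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)][string[]]$Server,
        [Parameter(Mandatory)][string]$Username,
        [Parameter(Mandatory)][string]$Password,
        [Parameter(Mandatory)][string]$FirmwarePath
    )
    # Fail fast, before touching any iLO, if the image file is missing
    if (-not (Test-Path -LiteralPath $FirmwarePath -PathType Leaf)) {
        throw "Firmware image not found: $FirmwarePath"
    }
    foreach ($ip in $Server) {
        # Flash sequentially so a bad image shows up on the first iLO, not all of them.
        # With -WhatIf, ShouldProcess only reports the target and nothing is flashed.
        if ($PSCmdlet.ShouldProcess($ip, "Flash iLO firmware from $FirmwarePath")) {
            Update-HPiLOFirmware -Server $ip -Username $Username -Password $Password -Location $FirmwarePath
        }
    }
}

# Usage (addresses and file name are placeholders):
# Invoke-iLOFirmwareRollout -Server 10.0.0.11, 10.0.0.12 -Username admin -Password $pass -FirmwarePath .\ilo4.bin -WhatIf
```

Remember that each iLO reboots after a successful flash, so expect its management interface to drop briefly.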

The other update CmdLet is the one I mentioned earlier as making module upgrades easier:

Update-HPiLOModuleVersion

According to its help, this command checks whether a newer version of the HPiLOCmdlets is available for download on the HP website. If there is, it opens a new browser window to download it.

Since this is the first release to include the update cmdlet, there is currently nothing newer for it to find. Given the navigation often required to find anything on the HP website, this should help. What I would really prefer, though, is for the utility to download the MSI directly from the command line.

Down to something more concrete and useful:

Let’s use these tools to take a look at the integrated storage controller.

In this case, I found that the G7 DL380s I have in the lab don’t respond, but some G8s with an HP Smart Array P420i answer right up.

```powershell
$ld = Get-HPiLOStorageController -Server $iLOIP -user $usr -pass $pass
$ld
```

From this view, we can see general controller status in addition to the Logical Drive(s).

```powershell
$ld.LOGICAL_DRIVE
```

If we dig into the Logical Drive(s), this is what we can see about each:

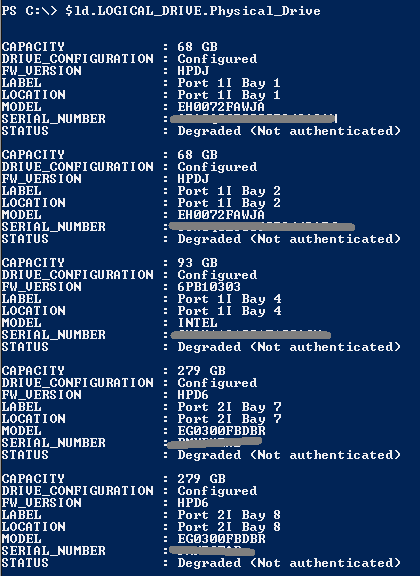

Physical Drive(s) details are also visible:

```powershell
$ld.LOGICAL_DRIVE.Physical_Drive
```
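With more than a couple of servers, it can help to roll those physical drive details into a single list. The sketch below loops over several iLO addresses, tags each drive with the iLO it came from, and turns a failed query (like the unresponsive G7s mentioned earlier) into a warning instead of stopping. It is a hypothetical helper, not part of HP’s module, and it assumes -Username and -Password are the full parameter names behind the -user and -pass abbreviations used above; the LOGICAL_DRIVE and Physical_Drive property names come from the output shown in this post.

```powershell
# Hypothetical helper, not part of HP's module: collect physical drive details from
# several iLOs into one list. Assumes -Username/-Password are the full names behind
# the -user/-pass abbreviations used above.
function Get-iLODriveReport {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string[]]$Server,
        [Parameter(Mandatory)][string]$Username,
        [Parameter(Mandatory)][string]$Password
    )
    foreach ($ip in $Server) {
        try {
            $ctrl = Get-HPiLOStorageController -Server $ip -Username $Username -Password $Password -ErrorAction Stop
        } catch {
            # Report the failure (e.g. a server that doesn't answer) and move on to the next iLO
            Write-Warning "No storage data from ${ip}: $($_.Exception.Message)"
            continue
        }
        foreach ($logical in $ctrl.LOGICAL_DRIVE) {
            foreach ($physical in $logical.Physical_Drive) {
                # Keep every property the iLO returned, prefixed with the iLO it came from
                $physical | Select-Object -Property @{ Name = 'iLO'; Expression = { $ip } }, *
            }
        }
    }
}

# Usage: Get-iLODriveReport -Server $iLOIP -Username $usr -Password $pass | Format-Table
```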

Since publishing my first post, I’ve been chatting over Twitter with a PM at HP about my experience with this, their first tool released for PowerShell. She assures me more PowerShell goodness is in the pipeline, and I hope to try it out and share more with you here.

Have you given the HPiLo PowerShell tools a try yet? What are your thoughts?

Along the same vein, I was inspired to try out the HP Insight Control – server provisioning tools.

Some time ago I took a look but was discouraged by the initial installation process: the time it took to install an OS, then the dependencies, and then the software components of the Insight Control server provisioning tools.

I found that there is now a virtual appliance available for either VMware (OVF) or Microsoft Hyper-V (VHDX). That said, at a whopping 11 GB, the download is not exactly slim! But the time saved by not having to install an OS and all the dependencies should make it worthwhile.

I’ll try to post about my experience here.

One more question:

Do you prefer the ease of vApps for downloading and running software solutions? Why or why not?

Let me know in the comments or on Twitter @kylemurley.